Two companies that recently integrated with Switch proved to be a different challenge from all the previous providers. Not only did they communicate using sockets, but they also demanded that a single socket be used for all the messages and that it stay active 100% of the time, processing transactions.

This may seem like a lot of pressure for a socket, and that’s because it really is. We studied the initial integration of these providers extensively and made several improvements to it. Let’s discuss how exactly we did that.

Multiple Hosts

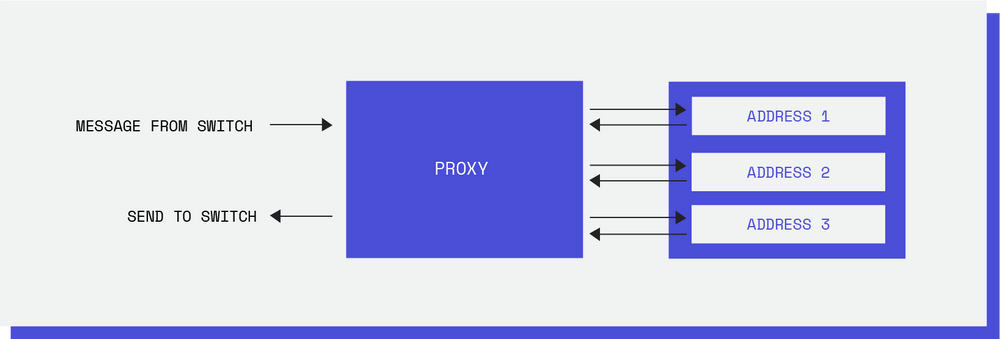

One of the first things we noticed was that both providers were only communicating through one address, specified by an IP address and a port. This could easily be improved once we realized there were multiple addresses with which we could communicate. It was possible to distribute the work across them and even parallelize it.

Unfortunately, in the first implementation we had assumed there would only ever be one address, and therefore one socket, which made a singleton a perfectly reasonable choice. So we changed the code to receive the address list as an argument, refactored the singleton, and created a dedicated class to handle all the sockets. We were finally communicating with all the addresses.

This feature had the big advantage of tolerating the failure of a single address, which unfortunately happened quite often. But we could exploit this functionality to a greater extent. Why should a client have to wait for a request that was made to a different address than the one it was communicating with? Or even to the same address? That is when we realized it was time for threads.
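As a rough illustration of that dedicated class, here is a minimal sketch of an object that owns one socket per address and hands them out in turn, skipping addresses that are down. The names `SocketPool`, `pick` and the injectable `connect` argument are hypothetical and not taken from the Switch codebase, and round-robin is just one possible way to spread the work.

```python
import itertools
import socket
from typing import Callable, Dict, List, Tuple

Address = Tuple[str, int]  # (ip, port)


class SocketPool:
    """Hypothetical helper: one connection per provider address."""

    def __init__(self, addresses: List[Address],
                 connect: Callable[[Address], object] = socket.create_connection):
        self._connect = connect
        self._addresses = list(addresses)
        self._sockets: Dict[Address, object] = {}
        self._cycle = itertools.cycle(self._addresses)
        for address in self._addresses:
            self._open(address)

    def _open(self, address: Address) -> None:
        try:
            self._sockets[address] = self._connect(address)
        except OSError:
            # The address is unreachable right now; leave it out of the pool.
            self._sockets.pop(address, None)

    def pick(self):
        """Return (address, socket) for the next live address, round-robin."""
        for _ in range(len(self._addresses)):
            address = next(self._cycle)
            sock = self._sockets.get(address)
            if sock is not None:
                return address, sock
        raise ConnectionError("no provider address is reachable")
```

With something like this in place, a failing address only removes one entry from the pool instead of taking the whole integration down.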

Making it fast and scalable. Time for threads.

At the start of this task, we made some assumptions that were disproved once we took a closer look at the code. One of the most important was that requests to the two providers were handled concurrently. However, when I started testing and printing every step so I could understand the code, all the lines came out in order. No chaos whatsoever! That could only mean the code was not using threads, and that problems could emerge once the number of clients started to increase.

The solution was very simple. The only problem was that the previous programmers had used a TCPServer, which uses TCP to provide continuous streams of data between the client and the server but processes requests one at a time. If we wanted concurrent behavior, we needed to change it to a ThreadingTCPServer, which handles each request in its own thread. Voilà! One line that changed everything!

We now had concurrent requests, but nothing is ever that easy with threads. After creating a script that could truly test concurrency, problems started to emerge. But it was nothing some good old locks could not handle.
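To make the difference concrete, here is a small self-contained sketch using Python's standard `socketserver` module. The handler, the barrier trick and the helper names (`UppercaseHandler`, `ask`, `run_two_clients`) are invented for this example and are not the Switch code. Each handler waits at a two-party barrier, so a request can only be answered as "concurrent" if another request is being handled at the same time.

```python
import socket
import socketserver
import threading

# Two handlers must be running at the same time to get past this barrier.
both_in_flight = threading.Barrier(2, timeout=5)


class UppercaseHandler(socketserver.StreamRequestHandler):
    def handle(self):
        line = self.rfile.readline()
        try:
            both_in_flight.wait()
            status = b"concurrent "
        except threading.BrokenBarrierError:
            # Nobody else showed up in time: requests are handled one by one.
            status = b"serial "
        self.wfile.write(status + line.upper())


def ask(port, message, replies):
    """Send one line to the local server and record the reply."""
    with socket.create_connection(("127.0.0.1", port), timeout=10) as sock:
        sock.sendall(message + b"\n")
        replies.append(sock.makefile("rb").readline())


def run_two_clients(server_class=socketserver.ThreadingTCPServer):
    replies = []
    # Port 0 lets the operating system choose a free port.
    with server_class(("127.0.0.1", 0), UppercaseHandler) as server:
        port = server.server_address[1]
        threading.Thread(target=server.serve_forever, daemon=True).start()
        clients = [threading.Thread(target=ask, args=(port, msg, replies))
                   for msg in (b"first", b"second")]
        for client in clients:
            client.start()
        for client in clients:
            client.join()
        server.shutdown()
    return sorted(replies)


if __name__ == "__main__":
    print(run_two_clients())
```

The server class passed to `run_two_clients` is the one line that matters here: with `ThreadingTCPServer` each request gets its own thread, so both handlers can meet at the barrier.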
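The kind of problem a lock solves here can be sketched as follows, assuming the issue is several threads sharing one connection: if a request and the read of its reply are not treated as one atomic step, a thread may pick up another thread's answer. `LockedChannel` and `EchoTransport` are hypothetical names, and the transport is a stand-in for a real provider socket.

```python
import threading


class LockedChannel:
    """Serialises request/response exchanges on one shared connection."""

    def __init__(self, transport):
        self._transport = transport  # any object with send() and receive()
        self._lock = threading.Lock()

    def exchange(self, request):
        # Hold the lock for the whole round trip so replies cannot be mixed up.
        with self._lock:
            self._transport.send(request)
            return self._transport.receive()


class EchoTransport:
    """Stand-in for a provider socket: answers the last request it was sent."""

    def __init__(self):
        self._pending = None

    def send(self, request):
        self._pending = request

    def receive(self):
        reply, self._pending = self._pending, None
        return reply


def run_clients(channel, count=50):
    """Fire `count` concurrent clients and collect what each one got back."""
    results = {}

    def client(number):
        results[number] = channel.exchange(number)

    threads = [threading.Thread(target=client, args=(n,)) for n in range(count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results
```

The trade-off is that exchanges on the same socket are serialised again, which is one more reason to spread the load over several addresses.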

Making it fast and scalable. Handling reconnects.

When we are communicating with a single address and it fails, some clients lose their transactions. The original solution was therefore a while loop that tried to reconnect every 0.1 seconds, blocking all future transactions until it succeeded.

Although this solution made sense for a single address, when we have multiple addresses we can simply reconnect the failed one in its own thread while the others continue to work. So in the new solution, a failure message is first sent to Switch, so the client doesn’t have to wait for its payment to finish, and only then does the address try to reconnect a pre-defined number of times. If it is still disconnected after those attempts, it tries to reconnect every time it receives a new message.

If all the addresses failed, it would try to reconnect to each of them once, and then again every time it received a request.
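The reconnect policy described above could look roughly like the sketch below. The class name `ReconnectingLink`, its methods and the `max_attempts` value are hypothetical. The 0.1 second delay is borrowed from the original loop, and whether the new solution waits between attempts at all is an assumption. The `connect` callable is injected so the policy can be exercised without a real provider.

```python
import time


class ReconnectingLink:
    """Bounded reconnects after a failure, then one lazy retry per message."""

    def __init__(self, address, connect, max_attempts=3, delay=0.1):
        self.address = address
        self._connect = connect          # callable(address) -> connection
        self._max_attempts = max_attempts
        self._delay = delay              # seconds between failed attempts
        self.connection = None

    def _reconnect(self, attempts):
        for _ in range(attempts):
            try:
                self.connection = self._connect(self.address)
                return True
            except OSError:
                time.sleep(self._delay)
        return False

    def on_failure(self):
        """Run once the failure reply has already been sent to the client."""
        self.connection = None
        return self._reconnect(self._max_attempts)

    def send(self, message):
        # Still disconnected: try exactly once more before giving up.
        if self.connection is None and not self._reconnect(1):
            raise ConnectionError("address %r is still down" % (self.address,))
        return self.connection.send(message)
```

To keep the other addresses working in the meantime, `on_failure` would be started in its own thread, for example with `threading.Thread(target=link.on_failure).start()`.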

Additional Features

Along with the aforementioned features, this project allowed us to identify other problems in the code. One of the providers was consuming a huge amount of memory and CPU, and after some examination with the Infrastructure Team, it was discovered that in one of the peer-to-peer IPsec VPN connections, both nodes were trying to be the active one, creating hundreds of unnecessary tunnels and causing huge spikes. Nothing a DevOps master and some emails cannot solve.

Other minor changes are also worth mentioning: a small modification to the Dockerfile, which fixed idle TCP connections being killed and forcing a reconnect every 5 minutes; the creation of an interface, so that more code could be reused; and even a declined pull request.

In conclusion, it was possible to improve the initial solution quite substantially and to better understand the main problems with the previous implementation. I believe this research will also help Switch integrate new providers with more confidence, providing a more efficient and robust solution to our clients.